Dear friends,

Machine learning development is an empirical process. It’s hard to know in advance the result of a hyperparameter choice, dataset, or prompt to a large language model (LLM). You just have to try it, get a result, and decide on the next step. Still, understanding how the underlying technology works is very helpful for picking a promising direction. For example, when prompting an LLM, which of the following is more effective?

Prompt 1: [Problem/question description] State the answer and then explain your reasoning.

Prompt 2: [Problem/question description] Explain your reasoning and then state the answer.

These two prompts are nearly identical, and the former matches the wording of many university exams. But the second prompt is much more likely to get an LLM to give you a good answer. Here’s why: An LLM generates output by repeatedly guessing the most likely next word (or token). So if you ask it to start by stating the answer, as in the first prompt, it will take a stab at guessing the answer and then try to justify what might be an incorrect guess. In contrast, prompt 2 directs it to think things through before it reaches a conclusion. This principle also explains the effectiveness of widely discussed prompts such as “Let’s think step by step.”
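To try the comparison yourself, here is a minimal sketch that builds both orderings from the same problem statement. The helper name `build_prompts` and the sample question are hypothetical, and sending the prompts to a model is left to whichever LLM client you use.

```python
def build_prompts(problem: str) -> dict[str, str]:
    """Return the two prompt orderings discussed above for one problem."""
    return {
        # Prompt 1: the model must commit to an answer before any reasoning tokens exist.
        "answer_first": f"{problem} State the answer and then explain your reasoning.",
        # Prompt 2: the reasoning tokens are generated first, so the answer can build on them.
        "reasoning_first": f"{problem} Explain your reasoning and then state the answer.",
    }


# Hypothetical sample question, used only for illustration.
prompts = build_prompts("What is 17 times 24?")
for name, text in prompts.items():
    print(f"{name}: {text}")
```

Running both variants on a handful of your own questions is a quick way to check how much the ordering matters for your model and task.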

The image above illustrates this difference using a question with one right answer. But similar considerations apply when asking an LLM to make judgment calls when there is no single right answer; for example, how to phrase an email, what to say to someone who is upset, or the proper department to route a customer email to.

That’s why it’s helpful to understand, in depth, how an algorithm works. And that means more than memorizing specific words to include in prompts or studying API calls. These algorithms are complex, and it’s hard to know all the details. Fortunately, there’s no need to. After all, you don’t need to be an expert in GPU compute allocation algorithms to use LLMs. But digging one or two layers deeper than the API documentation to understand how key pieces of the technology work will shape your insights. For example, in the past week, knowing how long-context transformer networks process input prompts and how tokenizers turn an input into tokens shaped how I used them.

A deep understanding of technology is especially helpful when the technology is still maturing. Most of us can get a mature technology like GPS to perform well without knowing much about how it works. But LLMs are still an immature technology, and thus your prompts can have non-intuitive effects. Developers who understand the technology in depth are likely to build more effective applications, and build them faster and more easily, than those who don't. Technical depth also helps you recognize when you can’t tell in advance what’s likely to work, so the best approach is to try a handful of promising prompts, get a result, and keep iterating.

Keep learning!

Andrew

P.S. Our short course on fine-tuning LLMs is now available! As I wrote last week, many developers are not only prompting LLMs but also fine-tuning them — that is, taking a pretrained model and training it further on their own data. Fine-tuning can deliver superior results, and it can be done relatively inexpensively. In this course, Sharon Zhou, CEO and co-founder of Lamini (disclosure: I’m a minor shareholder) shows you how to recognize when fine-tuning can be helpful and how to do it with an open-source LLM. Learn to fine-tune your own models here.

News

News Outlet Challenges AI Developers

The New York Times launched a multi-pronged attack on the use of its work in training datasets.

What’s new: The company updated its terms of service to forbid use of its web content and other data for training AI systems, Adweek reported. It’s also exploring a lawsuit against OpenAI for unauthorized use of its intellectual property, according to NPR. Meanwhile, The New York Times backed out of a consortium of publishers that would push for payment from AI companies.

From negotiation to mandate: The 173-year-old publisher, which has nearly 10 million subscribers across online and print formats, was negotiating with OpenAI to use its material, but talks recently broke down. The New York Times had more success with Google: In February, Google agreed to pay around $100 million to use Times content in search results, although an agreement on AI training was not reported.

- The New York Times’ updated terms of service prohibit visitors from using text, images, video, audio, or metadata to develop software or curate third-party datasets without explicit permission. The prohibition on software development explicitly includes training machine learning or AI systems. (The terms of service previously prohibited the use of web crawlers to scrape the publisher’s data without prior consent.)

- People with knowledge of the potential lawsuit said The New York Times worried that readers could get its reporting directly from ChatGPT.

- It’s unclear whether existing United States copyright law protects against AI training. If a judge were to rule in favor of The New York Times, OpenAI might have to pay up to $150,000 per instance of copyright infringement and possibly destroy datasets that contain related works. OpenAI might defend itself by claiming fair use, a legal standard vague enough that only a judge’s ruling can settle whether it applies.

Behind the news: Earlier this month, 10 press and media organizations including Agence France-Presse, Associated Press, and stock media provider Getty Images signed an open letter that urges regulators to place certain restrictions on AI developers. The letter calls for disclosure of training datasets, labeling of model outputs as AI-generated, and obtaining consent of copyright holders before training a model on their intellectual property. The letter followed several ongoing lawsuits that accuse AI developers of appropriating data without proper permission or compensation.

Why it matters: Large machine learning models rely on training data scraped from the web as well as other freely available sources. Text on the web is sufficiently plentiful that losing a handful of sources may not affect the quality of trained models. However, if the norms were to shift around using scraped data to train machine learning models in ways that significantly reduced the supply of high-quality data, the capabilities of trained models would suffer.

We’re thinking: Society reaps enormous rewards when people are able to learn freely. Similarly, we stand to gain incalculable benefits by allowing AI to learn from information available on the web. An interpretation of copyright law that blocks such learning would hurt society and derail innovation. It’s long past time to rethink copyright for the age of AI.

Defcon Contest Highlights AI Security

Hackers attacked AI models in a large-scale competition to discover vulnerabilities.

What’s new: At the annual Defcon hacker convention in Las Vegas, 2,200 people competed to break guardrails around language models, The New York Times reported. The contest, which was organized by AI safety nonprofits Humane Intelligence and SeedAI and sponsored by the White House and several tech companies, offered winners an Nvidia RTX A6000 graphics card.

Breaking models: Contestants in the Generative Red Team Challenge had 50 minutes to perform 21 tasks of varying difficulty, which they selected from a board like that of the game show Jeopardy. Seven judges scored their submissions.

- Anthropic, Cohere, Google, Hugging Face, Meta, Nvidia, OpenAI, and Stability AI provided large language models for competitors to poke and prod.

- Among the flaws discovered: inconsistencies in language translations, discrimination against a job candidate based on caste, and a reference to a nonexistent 28th amendment to the United States Constitution.

- Two of the four winning scores were achieved by Stanford computer science student Cody Ho, who entered the contest five times.

- The organizers plan to release the contestants’ prompts and model outputs to researchers in September 2023 and a public dataset in August 2024.

Behind the news: Large AI developers often test their systems by hiring hackers to attack them. Such groups are called “red teams,” a term the United States military used for the stand-in enemy forces in Cold War-era war games.

- Google shed light on its red team in a July blog post. Members attempt to manipulate Google’s models into outputting data not intended by its developers, eliciting harmful or biased results, revealing training data, and the like.

- Microsoft also recently featured its red team. The team, which started in 2018, probes models available on the company’s Azure cloud service.

- OpenAI hired a red team of external researchers to evaluate the safety of GPT-4. They coaxed the model to produce chemical weapon recipes, made-up words in Farsi, and racial stereotypes before developers fine-tuned the model to avoid such behavior.

Why it matters: The security flaws found in generative AI systems are distinctly different from those in other types of software. Enlisting hackers to attack systems in development is essential in sniffing out flaws in conventional software. It’s a good bet for discovering deficiencies in AI models as well.

We’re thinking: Defcon attracts many of the world’s most talented hackers — people who have tricked ATMs into dispensing cash and taken over automobile control software. We feel safer knowing that this crowd is on our side.

A MESSAGE FROM DEEPLEARNING.AI

Join "Finetuning Large Language Models," a new short course that teaches you how to fine-tune open-source models on your own data. Enroll today and get started.

AI Chip Challenger Gains Traction

An upstart supplier of AI chips secured a major customer.

What’s new: Cerebras, which competes with Nvidia in hardware for training large models, signed a $100 million contract with Abu Dhabi tech conglomerate G42. The deal is the first part of a multi-stage plan to build a network of supercomputers.

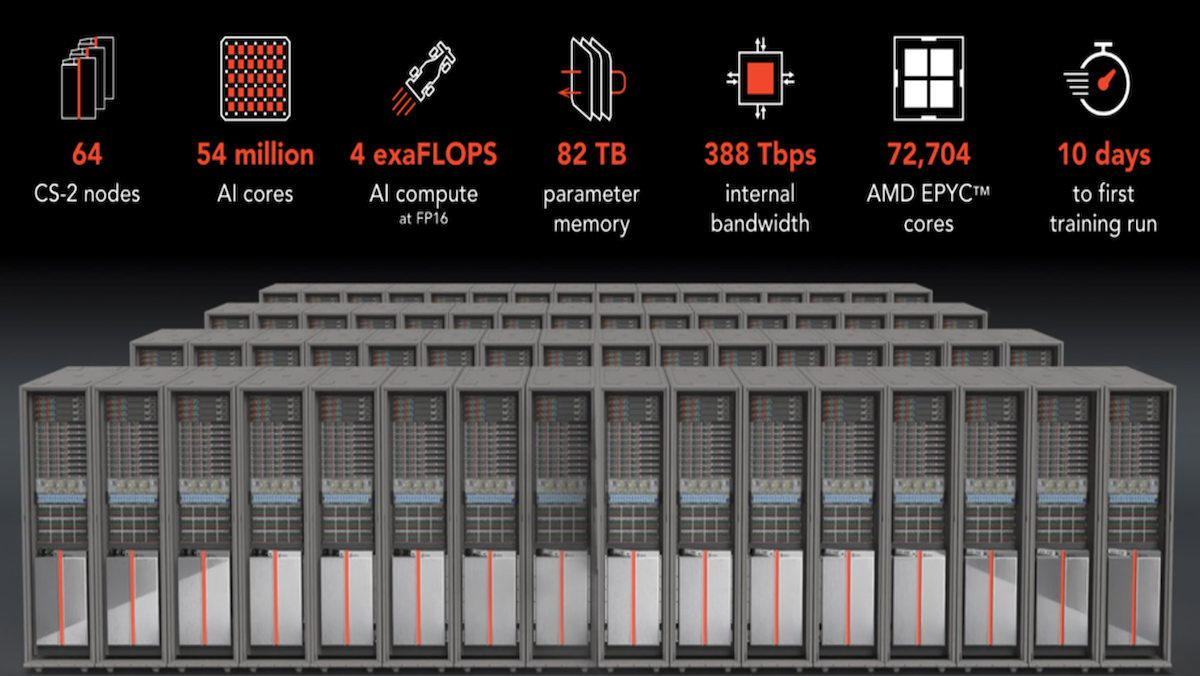

How it works: The deal covers the first three of nine proposed systems. The first, Condor Galaxy 1 (CG-1), is already up and running in Santa Clara, California. CG-2 and CG-3 are slated to open in early 2024 in Austin, Texas, and Asheville, North Carolina. Cerebras and G42 are in talks to build six more by the end of 2024. G42 plans to use the network to supply processing power primarily to healthcare and energy companies.

- The systems are based on Cerebras’ flagship chip, which is designed to overcome communication bottlenecks between separate AI and memory chips by packing computing resources onto a single giant chip. Each chip fills an entire silicon wafer, which is typically divided into smaller chips. It holds 2.6 trillion transistors organized into 850,000 cores, compared to an Nvidia H100 GPU, which has 80 billion transistors and around 19,000 cores.

- CG-1 comprises 32 Cerebras chips (soon to be upgraded to 64), which process AI operations, as well as 82 terabytes of memory. Over 72,700 AMD EPYC cores handle input and output processing.

- Each supercomputer will run at 4 exaflops (4 quintillion floating point operations per second) peak performance. In comparison, Google’s Cloud TPU v4 Pods deliver 1.1 exaflops.

- The architecture enables processing to be distributed among all the chips with minimal loss of efficiency.
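For a sense of scale, the figures quoted above can be turned into rough ratios. This is only back-of-the-envelope arithmetic on the numbers in the text: cores on different architectures are not directly comparable, and peak exaflops figures may assume different numeric precisions. The variable names are ours.

```python
# Figures as quoted in the text above.
wafer_transistors = 2.6e12      # Cerebras chip: 2.6 trillion transistors
h100_transistors = 80e9         # Nvidia H100: 80 billion transistors
wafer_cores = 850_000           # Cerebras chip cores
h100_cores = 19_000             # "around 19,000" cores, so this is approximate
condor_exaflops = 4.0           # peak, per Condor Galaxy supercomputer
tpu_v4_pod_exaflops = 1.1       # Google Cloud TPU v4 Pod

# Raw ratios only; these are not benchmark results.
transistor_ratio = wafer_transistors / h100_transistors
core_ratio = wafer_cores / h100_cores
exaflops_ratio = condor_exaflops / tpu_v4_pod_exaflops

print(f"Transistors: {transistor_ratio:.1f}x")
print(f"Cores: {core_ratio:.1f}x")
print(f"Peak exaflops: {exaflops_ratio:.1f}x")
```

By these raw counts, one wafer-scale chip holds about 32 times the transistors of an H100, and one Condor Galaxy system offers roughly 3.6 times the quoted peak of a TPU v4 Pod.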

Behind the news: Nvidia accounts for 95 percent of the market for GPUs used in machine learning — a formidable competitor to Cerebras and other vendors of AI chips. Despite Nvidia’s position, though, there are signs that it’s not invincible.

- Nvidia has struggled to keep up with the surge in demand brought on by generative AI.

- Google and Amazon design their own AI chips, making them available to customers through their cloud platforms. Meta and Microsoft have announced plans to design their own as well.

Why it matters: The rapid adoption of generative AI is fueling demand for the huge amounts of processing power required to train and run state-of-the-art models. In practical terms, Nvidia is the only supplier of tried-and-true AI chips for large-scale systems. This creates a risk for customers who need access to processing power and an opportunity for competitors who can satisfy some of that demand.

We’re thinking: As great as Nvidia’s products are, a monopoly in AI chips is not in anyone’s best interest. Cerebras offers an alternative for training very large models. Now cloud-computing customers can put it to the test.

Vision Transformers Made Manageable

Vision transformers typically process images in patches of fixed size. Smaller patches yield higher accuracy but require more computation. A new training method lets AI engineers adjust the tradeoff.

What's new: Lucas Beyer and colleagues at Google Research trained FlexiViT, a vision transformer that allows users to specify the desired patch size.

Key insight: Vision transformers turn each patch into a token using two matrices of weights, whose values describe the patch’s position and appearance. The dimensions of these matrices depend on patch size. Resizing the matrices enables a transformer to use patches of arbitrary size.

How it works: The authors trained a standard vision transformer on patches of random sizes between 8x8 and 48x48 pixels. They trained it to classify ImageNet-21K (256x256 pixels).

- FlexiViT learned a matrix of size 32x32 to describe each patch’s appearance and a matrix of size 7x7 to describe its position.

- Given an image, FlexiViT resized the matrices according to the desired patch size without otherwise changing the architecture. To accomplish this, the authors developed a complicated method they call pseudo-inverse resize (PI resize).
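The summary above only names PI resize, so the following NumPy sketch is our own illustration of one way a pseudo-inverse resize can work, not the authors' code. It assumes the patch is resized by linear interpolation, written as a matrix B, and that the resized weights are computed as pinv(Bᵀ) applied to the original weights, so that an upsampled patch produces the same dot product with the resized weights as the original patch did with the original weights. The function names and the toy 4x4 to 8x8 sizes are hypothetical.

```python
import numpy as np


def resize_matrix_1d(old: int, new: int) -> np.ndarray:
    """Matrix B (new x old) such that B @ v linearly interpolates v to length `new`."""
    old_coords = np.arange(old)
    new_coords = np.linspace(0, old - 1, new)
    # Column j is what the resize does to the j-th basis vector.
    return np.stack(
        [np.interp(new_coords, old_coords, basis) for basis in np.eye(old)], axis=1
    )


def resize_matrix_2d(old: int, new: int) -> np.ndarray:
    """Resize matrix acting on row-major flattened (old x old) patches."""
    b = resize_matrix_1d(old, new)
    return np.kron(b, b)


def pi_resize(weights: np.ndarray, new: int) -> np.ndarray:
    """Assumed pseudo-inverse resize of a square weight patch (upsampling case)."""
    old = weights.shape[0]
    b = resize_matrix_2d(old, new)
    resized = np.linalg.pinv(b.T) @ weights.reshape(-1)
    return resized.reshape(new, new)


rng = np.random.default_rng(0)
w = rng.normal(size=(4, 4))   # toy patch-embedding weights
x = rng.normal(size=(4, 4))   # toy image patch

w_big = pi_resize(w, 8)
x_big = (resize_matrix_2d(4, 8) @ x.reshape(-1)).reshape(8, 8)

original_response = float(np.sum(x * w))
resized_response = float(np.sum(x_big * w_big))
print(original_response, resized_response)
```

Under these assumptions the two printed responses agree up to floating-point error, which is the property that lets one set of weights serve several patch sizes. The equality holds exactly only when upsampling; downsampling discards information, so the match becomes approximate.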

Results: The authors compared FlexiViT to two vanilla vision transformers, ViT-B/16 and ViT-B/30, trained on ImageNet-21K using patch sizes of 16x16 and 30x30 respectively. Given patches of various sizes, the vanilla vision transformers’ position and appearance matrices were resized in the same manner as FlexiViT’s. FlexiViT performed consistently well across patch sizes, while the models trained on a fixed patch size performed well only with that size. For example, given 8x8 patches, FlexiViT achieved 50.2 percent precision, ViT-B/16 achieved 30.5 percent precision, and ViT-B/30 achieved 2.9 percent precision. Given 30x30 patches, FlexiViT achieved 46.6 percent precision, ViT-B/16 achieved 2.4 percent precision, and ViT-B/30 achieved 47.1 percent precision.

Why it matters: The processing power available often depends on the project. This approach makes it possible to train a single vision transformer and tailor its patch size to accommodate the computation budget at inference.
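To make the compute tradeoff concrete, this small sketch counts the tokens a vision transformer would process at different patch sizes. It assumes a 256x256 input split into non-overlapping square patches and uses the squared token count as a rough proxy for self-attention cost. The function name and the chosen patch sizes are illustrative.

```python
def num_tokens(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping square patches (tokens) in a square image."""
    per_side = image_size // patch_size
    return per_side * per_side


IMAGE_SIZE = 256  # assumed input resolution in pixels

for patch_size in (8, 16, 32):
    tokens = num_tokens(IMAGE_SIZE, patch_size)
    # Self-attention compares every token with every other token.
    attention_pairs = tokens * tokens
    print(f"patch {patch_size}x{patch_size}: {tokens} tokens, {attention_pairs} attention pairs")
```

Halving the patch size quadruples the token count: 8x8 patches yield 1,024 tokens versus 256 for 16x16, so the attention cost grows by roughly a factor of 16.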

We're thinking: Unlike text transformers, for which turning text into a sequence of tokens is relatively straightforward, vision transformers offer many possibilities for turning an image into patches and patches into tokens. It’s exciting to see continued innovation in this area.

Data Points

Supermarket recipe bot produced a dangerous cooking formula

New Zealand-based supermarket chain Pak‘nSave introduced a bot to generate recipes from leftover ingredients. A user requested a recipe that included the dangerous combination of water, ammonia, and bleach, and the bot complied. The company responded by putting safeguards in place to prevent misuse. (Gizmodo)

California firefighters harness AI to combat wildfires

The ALERTCalifornia program is aiding California firefighters by using over 1,000 cameras to feed video data into an AI system that identifies potential wildfires and alerts first responders, ensuring rapid action. The platform, operational since July, has already demonstrated its effectiveness by detecting fires in remote locations. (Reuters)

Tutoring firm settles US lawsuit over AI bias

China's iTutorGroup Inc agreed to resolve a lawsuit brought by the U.S. Equal Employment Opportunity Commission (EEOC). The U.S. alleged that iTutorGroup unlawfully used AI to discriminate against older job seekers. The company agreed to pay $365,000 to over 200 applicants. (Reuters)

U.S. legislators establish AI working group

Members of the Democratic party in the U.S. House of Representatives formed a working group to focus on AI regulations. The group aims to collaborate with the Biden administration, companies, and fellow legislators to formulate bipartisan policies concerning AI. (Reuters)

Skydio shifts from consumer drones to enterprise and public sector

The company, known for its consumer drones, exited the market and will focus on growing its enterprise and public-sector business. However, the company hasn't ruled out a return to the consumer drone space in the future. (IEEE Spectrum)

School district uses ChatGPT to decide which books to remove from libraries

In response to state legislation requiring books to be age-appropriate, the Mason City Community School District in Iowa used ChatGPT to assess its catalog. Administrators decided that consulting with the chatbot was the simplest way to comply with the requirements efficiently, despite concerns about its accuracy and consistency. (Popular Science)

San Francisco approves round-the-clock robotaxi operations

The California Public Utilities Commission granted approval to Waymo and Cruise robotaxis to operate throughout San Francisco at all hours. Shortly after the approval was announced, a driverless Cruise vehicle drove into a city paving project and became stuck in wet concrete. (AP and The New York Times)

Gartner’s 2023 Hype Cycle elevates generative AI to 'Peak of Inflated Expectations'

The Gartner Hype Cycle, which interprets the progress of tech trends, placed generative AI at the Peak of Inflated Expectations. The market research firm attributed this designation to the proliferation of products claiming generative AI integration and the discrepancy between vendors' claims and real-world benefits. The next phase in the Hype Cycle is the 'Trough of Disillusionment.' (VentureBeat)

Research: OpenAI apparently conceals use of copyrighted material in ChatGPT training

Researchers found that ChatGPT avoids responses that include verbatim excerpts from copyrighted material, behavior that wasn’t observed in earlier versions of the chatbot. Nonetheless, they were able to devise prompts that caused the model to output such excerpts. (Business Insider)