Dear friends,

Prompt-based development is making the machine learning development cycle much faster: Projects that used to take months now may take days. I wrote in an earlier letter that this rapid development is causing developers to do away with test sets.

The speed of prompt-based development is also changing the process of scoping projects. In lieu of careful planning, it’s increasingly viable to throw a lot of projects at the wall to see what sticks, because each throw is inexpensive.

Specifically, if building a system took 6 months, it would make sense for product managers and business teams to plan the process carefully and proceed only if the investment seemed worthwhile. But if building something takes only 1 day, then it makes sense to just build it and see if it succeeds, and discard it if it doesn’t. The low cost of trying an idea also means teams can try out a lot more ideas in parallel.

Say you’re in charge of building a natural language processing system to process inbound customer-service emails, and a teammate wants to track customer sentiment over time. Before the era of large pre-trained text transformers, this project might involve labeling thousands of examples, training and iterating on a model for weeks, and then setting up a custom inference server to make predictions. Given the effort involved, before you started building, you might also want to increase confidence in the investment by having a product manager spend a few days designing the sentiment display dashboard and verifying whether users found it valuable.

But if a proof of concept for this project can be built in a day by prompting a large language model, then, rather than spending days/weeks planning the project, it makes more sense to just build it. Then you can quickly test technical feasibility (by seeing if your system generates accurate labels) and business feasibility (by seeing if the output is valuable to users). If it turns out to be either technically too challenging or unhelpful to users, the feedback can help you improve the concept or discard it.

I find this workflow exciting because, in addition to increasing the speed of iteration for individual projects, it significantly increases the volume of ideas we can try. In addition to plotting the sentiment of customer emails, why not experiment with automatically routing emails to the right department, providing a brief summary of each email to managers, clustering emails to help spot trends, and many more creative ideas? Instead of planning and executing one machine learning feature, it’s increasingly possible to build many, quickly check if they look good, ship them to users if so, and get rapid feedback to drive the next step of decision making.

One important caveat: As I mentioned in the letter about eliminating test sets, we shouldn’t let the speed of iteration lead us to forgo responsible AI. It’s fantastic that we can ship quick-and-dirty applications. But if there is risk of nontrivial harm such as bias, unfairness, privacy violation, or malevolent uses that outweigh beneficial uses, we have a responsibility to evaluate our systems’ performance carefully and ensure that they’re safe before we deploy them widely.

What ideas do you have for prompt-based applications? If you brainstorm a few different ways such applications could be useful to you or your company, I hope you’ll implement many of them (safely and responsibly) and see if some can add value!

Keep learning,

Andrew

P.S. We just announced a new short course today, LangChain: Chat with Your Data, built in collaboration with Harrison Chase, creator of the open-source LangChain framework. In this course, you’ll learn how to build one of the most-requested LLM-based applications: Answering questions based on information in a document or collection of documents. This one-hour course teaches you how to do that using retrieval augmented generation (RAG). It also covers how to use vector stores and embeddings to retrieve document chunks relevant to a query.

News

The Secret Life of Data Labelers

The business of supplying labeled data for building AI systems is a global industry. But the people who do the labeling face challenges that impinge on the quality of both their work and their lives.

What’s new: The Verge interviewed more than two dozen data annotators, revealing a difficult, precarious gig economy. Workers often find themselves jaded by low pay, uncertain schedules, escalating complexity, and deep secrecy about what they’re doing and why.

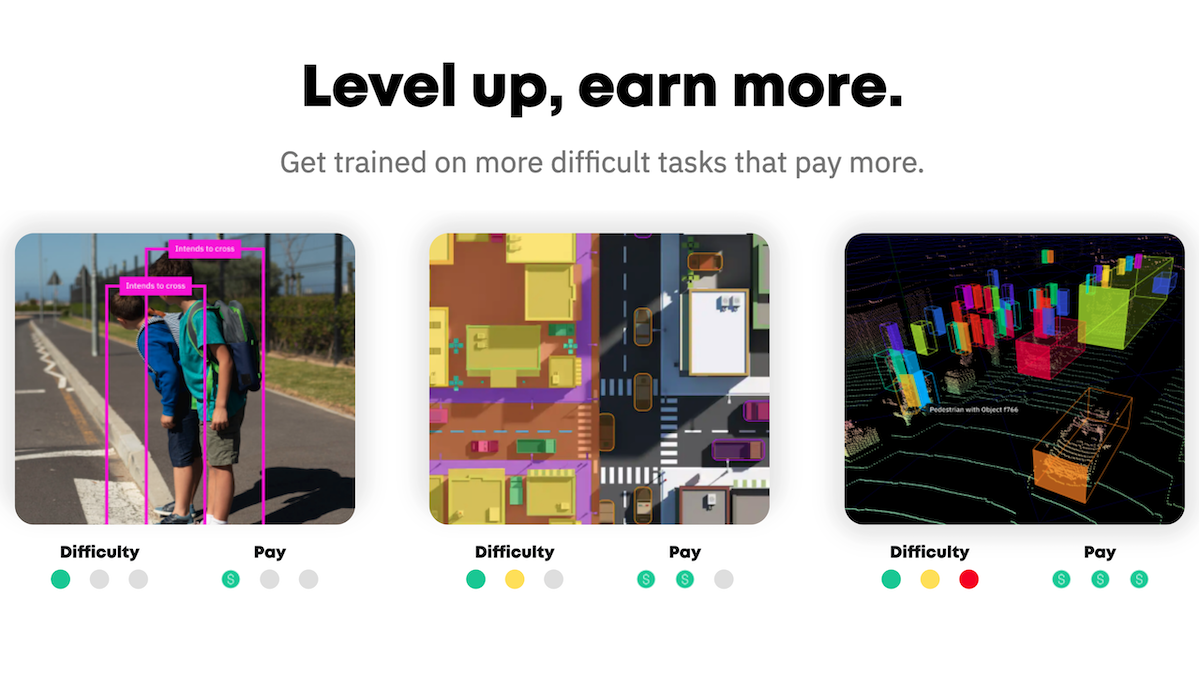

How it works: Companies that provide labeling services including Centaur Labs, Surge AI, and Remotasks (a division of data supplier Scale AI) use automated systems to manage gig workers worldwide. Workers undergo qualification exams, training, and performance monitoring to perform tasks like drawing bounding boxes, classifying sentiments expressed by social media posts, evaluating video clips for sexual content, sorting credit-card transactions, rating chatbot responses, and uploading selfies of various facial expressions.

- The pay scale varies widely, depending on the worker’s location and the task assigned, from $1 per hour in Kenya to $25 per hour or more in the U.S. Some tasks that require specialized knowledge, sound judgment, and/or extensive labor can pay up to $300 per task.

- To protect their clients’ trade secrets, employers dole out assignments without identifying the client, application, or function. Workers don’t know the purpose of the labels they’re called upon to produce, and they’re warned against talking about their work.

- The assignments often begin with ambiguous instructions. They may call for, say, labeling actual clothing that might be worn by a human being, so clothes in a photo of a toy doll or a cartoon drawing clearly don’t qualify. But do images of clothing reflected in a mirror? And does a suit of armor count as clothing? How about swimming fins? As developers iterate on their models, rules that govern how the data should be labeled become more elaborate, forcing labelers to keep in mind a growing variety of exceptions and special cases. Workers who make too many mistakes may lose the gig.

- Work schedules are sporadic and unpredictable. Workers don’t know when the next assignment will arise or how long it will last, whether the next gig will be interesting or soul-crushing, or whether it will pay well or poorly. Such uncertainty, along with the differential between their wages and their employers’ revenue as reported in the press, can leave workers demoralized.

- Many labelers manage the stress by gathering in clandestine groups on WhatsApp to share information and seek advice about how to find good gigs and avoid undesirable ones. There, they learn tricks like using existing AI models to do the work, connecting through proxy servers to disguise their locations, and maintaining multiple accounts as a hedge against being suspended if they are caught breaking rules.

What they’re saying: “AI doesn’t replace work. But it does change how work is organized.” —Erik Duhaime, CEO, Centaur Labs

Behind the news: Stanford computer scientist Fei-Fei Li was an early pioneer in crowdsourcing data annotations. In 2007, she led a team at Princeton to scale the number of images used to train an image recognizer from tens of thousands to millions. To get the work done, the team hired thousands of workers via Amazon’s Mechanical Turk platform. The result was ImageNet, a key computer vision dataset.

Why it matters: Developing high-performance AI systems depends on accurately annotated data. Yet the harsh economics of annotating at scale encourages service providers to automate the work and workers to either cut corners or drop out. Notwithstanding recent improvements — for instance, Google raised its base wage for contractors who evaluate search results and ads to $15 per hour — everyone would benefit from treating data annotation less like gig work and more like a profession.

We’re thinking: The value of skilled annotators becomes even more apparent as AI practitioners adopt data-centric development practices that make it possible to build effective systems with relatively few examples. With far fewer examples, selecting and annotating them properly is absolutely critical.

Making Government Multilingual

An app is bridging the language gap between the Indian government and its citizens, who speak a wide variety of languages.

What’s new: Jugalbandi helps Indians learn about government services, which typically are described online in English and Hindi, in their native tongues. The project is a collaboration between Microsoft and open-source developers AI4Bharat and OpenNyAI.

How it works: Jugalbandi harnesses an unspecified GPT model from the Microsoft Azure cloud service and models from AI4Bharat, a government-backed organization that provides open-source models and datasets for South Asian languages. As of May, the system covered 10 of India’s 22 official languages (out of more than 120 that are spoken there) and over 170 of the Indian government’s 20,000 programs.

- Users send text or voice messages to a WhatsApp number associated with Jugalbandi. The system transcribes voice messages into text using the speech recognition model IndicWav2Vec. Then it translates the text into English using IndicTrans.

- Jugalbandi queries documents for information relevant to the user’s request using the Retrieval Augmented Generation model and generates responses using an unspecified OpenAI model. IndicTrans translates the answer into the user’s language, and one of AI4Bharat’s Indic text-to-speech models renders voice output for users who submitted their queries by voice.
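
The flow described above can be sketched as a chain of stages. This is a minimal illustration, not Jugalbandi’s actual code: the function name `answer_query` and every stage callable are hypothetical placeholders standing in for the speech recognition, translation, retrieval, generation, and text-to-speech models named in the text.

```python
def answer_query(message, is_voice, user_lang, *, transcribe, translate,
                 retrieve, generate, synthesize):
    """Route one user message through the stages described above.

    Every stage is passed in as a plain callable (all hypothetical), so this
    function only fixes the order of the steps; it calls no real model.
    """
    # 1. Voice messages are transcribed; text messages pass through unchanged.
    text = transcribe(message) if is_voice else message
    # 2. Translate from the user's language into English.
    english_query = translate(text, user_lang, "en")
    # 3. Retrieve relevant passages and generate an English answer from them.
    passages = retrieve(english_query)
    english_answer = generate(english_query, passages)
    # 4. Translate back; synthesize speech only if the query arrived by voice.
    answer = translate(english_answer, "en", user_lang)
    return synthesize(answer) if is_voice else answer


# Toy stand-ins (assumptions for illustration) so the sketch runs end to end.
reply = answer_query(
    "pension?", False, "hi",
    transcribe=lambda audio: audio,
    translate=lambda text, src, tgt: f"[{src}->{tgt}] {text}",
    retrieve=lambda query: ["Pension scheme details"],
    generate=lambda query, passages: f"See: {passages[0]}",
    synthesize=lambda text: text.encode("utf-8"),
)
print(reply)
```

Passing the stages in as arguments keeps the ordering visible while leaving open which models fill each role.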

Behind the news: While language models are helping citizens understand their governments, they’re also helping governments understand their citizens. In March, Romania launched ION, an AI system that scans social media comments on government officials and policy and summarizes them for ministers to read.

Why it matters: India is a highly multilingual society, and around a quarter of its 1.4 billion residents are illiterate. Consequently, many people in India struggle to receive government benefits and interact with central authorities. This approach may enable Indians to use their own language via WhatsApp, which has more than 400 million users in that country.

We’re thinking: In February, Microsoft researchers showed that large language models are approaching state-of-the-art results in machine translation. Indeed, machine translation is headed toward a revolution as models like GPT 3.5 (used in the study) and GPT-4 (which is even better) make translations considerably easier and more accurate.

A MESSAGE FROM DEEPLEARNING.AI

Chatting with data is a highly valuable use case for large language models. In this short course, you’ll use the open source LangChain framework to build a chatbot that interacts with your business or personal data. Enroll in “LangChain: Chat with Your Data” today for free!

Letting Chatbots See Your Data

A new coding framework lets you pipe your own data into large language models.

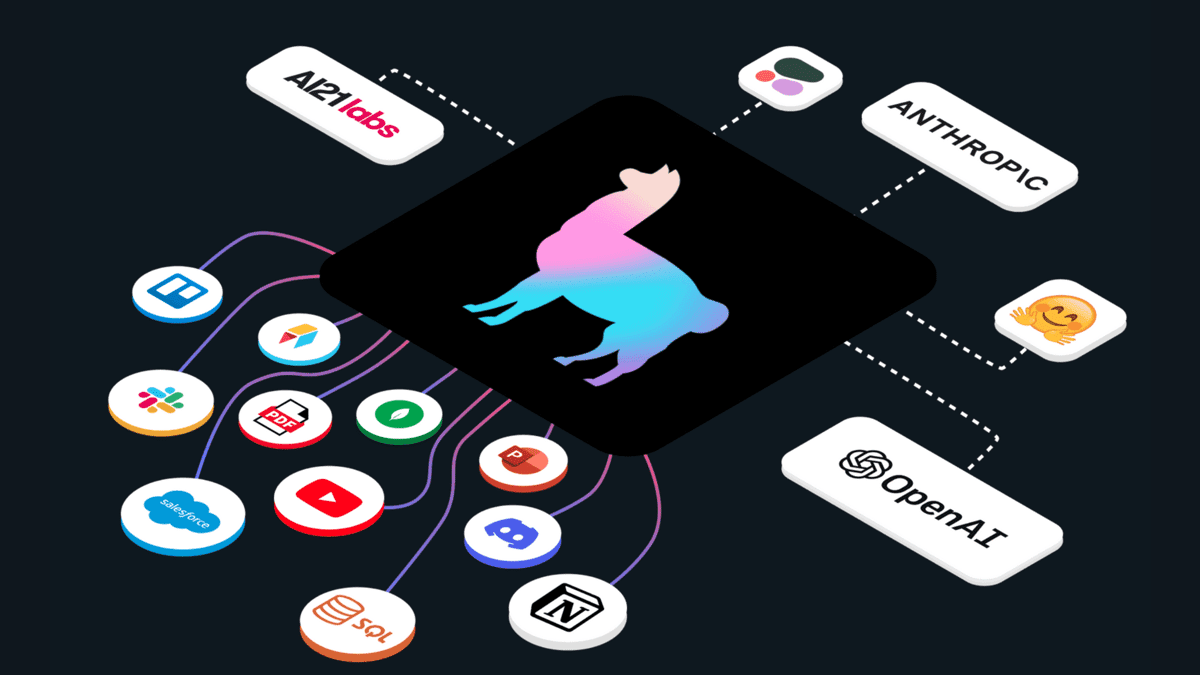

What’s new: LlamaIndex streamlines the code developers need to summarize, reason over, and otherwise manipulate data from documents, databases, and apps using models like GPT-4.

How it works: LlamaIndex is a free Python library that works with any large language model.

- Connectors convert various file types into text that a language model can read. Over 100 connectors are available for unstructured files like PDFs, raw text, video, and audio; structured sources like Excel or SQL files; or APIs for apps such as Salesforce or Slack.

- LlamaIndex divides the resulting text into chunks, embeds each chunk, and stores the embeddings in a database. Then users can call the language model to extract keywords, summarize, or answer questions about their data.

- Users can prompt the language model using a description such as, “Given our internal wiki, write a one-page onboarding document for new hires.” LlamaIndex embeds the query, retrieves the best-matching embedding from the database, and sends both to the language model. Users receive the language model's response; in this case, a one-page onboarding document.
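
The chunk, embed, and retrieve steps above can be illustrated with a small self-contained sketch. It deliberately does not use LlamaIndex’s API. The function names are hypothetical, and the bag-of-words “embedding” is a toy stand-in for the learned embeddings a real system would use.

```python
import math
from collections import Counter


def chunk_text(text, chunk_size):
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, len(words), chunk_size)]


def embed(text):
    """Toy embedding (assumption): lowercase word counts, punctuation stripped."""
    return Counter(w.strip(".,?!").lower() for w in text.split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, index):
    """Return the stored chunk whose embedding best matches the query."""
    q = embed(query)
    return max(index, key=lambda item: cosine(q, item[1]))[0]


def build_prompt(query, context):
    """Combine the retrieved chunk and the query into one prompt string."""
    return f"Context:\n{context}\n\nRequest: {query}"


wiki = ("New hires receive a laptop on their first day. "
        "Expense reports are due on the fifth of each month.")
# The "database" here is just a list of (chunk, embedding) pairs.
index = [(chunk, embed(chunk)) for chunk in chunk_text(wiki, chunk_size=9)]

query = "When do new hires receive a laptop?"
best = retrieve(query, index)
prompt = build_prompt(query, best)  # this string would go to the language model
print(prompt)
```

Swapping `embed` for a learned embedding model and the list for a vector database would keep the same shape.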

Behind the news: Former Uber research scientist Jerry Liu began building LlamaIndex (originally GPT Index) in late 2022 and co-founded a company around it earlier this year. The company, which recently received $8.5 million in seed funding, plans to launch an enterprise version later this year.

Why it matters: Developing bespoke apps that use a large language model typically requires building custom programs to parse private databases. LlamaIndex offers a more direct route.

We’re thinking: Large language models are exciting new tools for developing AI applications. Libraries like LlamaIndex and LangChain provide glue code that makes building complex applications much easier — early entries in a growing suite of tools that promise to make LLMs even more useful.

Bug Finder

One challenge to making online education available worldwide is evaluating an immense volume of student work. Especially difficult is evaluating interactive computer programming assignments such as coding a game. A deep learning system automated the process by finding mistakes in completed assignments.

What’s new: Evan Zheran Liu and colleagues at Stanford proposed DreamGrader, a system that integrates reinforcement and supervised learning to identify errors (undesirable behaviors) in interactive computer programs and provide detailed information about where the problems lie.

Key insight: A reinforcement learning model can play a game, randomly at first, and, if it receives the proper rewards, learn to take actions that bring about an error. A classifier, which also starts out guessing at random, can learn to recognize that the error occurred and reward the RL model when it triggers the error. In this scheme, training requires a small number of student submissions that have been labeled with a particular error that is known to occur. The two models learn in an alternating fashion: The RL model plays for a while and does or doesn’t bring about the error; the classifier judges whether the RL model’s actions triggered the error and, if so, dispenses a reward; then the RL model plays more, and so on. By repeating this cycle, the classifier learns to recognize an error reliably.

How it works: DreamGrader was trained on a subset of 3,500 anonymized student responses to an assignment from the online educational platform Code.org. Students were asked to code Bounce, a game in which a single player moves a paddle along a horizontal axis to send a ball into a goal. The authors identified eight possible errors (such as the ball bouncing out of the goal after entering and no new ball being launched after a goal was scored) and labeled the examples accordingly. The system comprised two components for each type of error: (i) a player that played the game (a double dueling deep Q-network) and (ii) a classifier (an LSTM and vanilla neural network) that decided whether the error occurred.

- The player played the game for 100 steps, each comprising a video frame and associated paddle motion, or until the score exceeded 30. The model moved the paddle based on the gameplay’s “trajectory”: (i) current x and y coordinates of the paddle and ball, (ii) x and y velocities of the ball, and (iii) previous paddle movements, coordinates, ball velocities, and rewards.

- The player received a reward for bringing about an error, and it was trained to maximize its reward. To compute rewards, the system calculated the difference between the classification (error or no error) of the trajectory at the current and previous steps. In this way, the player received a reward only at the step in which the error occurred.

- The feedback classifier learned in a supervised manner.

- The authors repeated this process many times for each program to cover a wide variety of gameplay situations.

- At inference, DreamGrader ran each player-and-classifier pair on a program and output a list of errors it found.
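
The reward rule described above (the difference between the classifier’s verdict at the current and previous steps) can be sketched in a few lines. This is a simplified illustration rather than the authors’ code. It assumes the classifier emits a hard 0/1 verdict on the trajectory so far and that the verdict before the first step is 0. The name `step_rewards` is hypothetical.

```python
def step_rewards(error_flags):
    """Turn per-step classifier verdicts into per-step rewards.

    error_flags[t] is 1 if the classifier judges that the error has occurred
    in the trajectory up to step t, else 0 (assumed binary for this sketch).
    The reward at each step is the current verdict minus the previous one.
    """
    rewards = []
    previous = 0  # assumption: no error is detected before play begins
    for current in error_flags:
        rewards.append(current - previous)
        previous = current
    return rewards


# The error first shows up at the third step and stays detected afterward.
flags = [0, 0, 1, 1, 1]
rewards = step_rewards(flags)
print(rewards)  # [0, 0, 1, 0, 0]
```

Because the differences telescope, the total reward equals the final verdict, and the only nonzero reward lands on the step where the verdict flips.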

Results: The authors evaluated DreamGrader on a test set of Code.org student submissions. For comparison, they modified the previous Play to Grade, which had been designed to identify error-free submissions, to predict the presence of a specific error. DreamGrader achieved 94.3 percent accuracy, 1.5 percent short of human-level performance, while Play to Grade achieved 75.5 percent accuracy. DreamGrader evaluated student submissions in around 1 second each, 180 times faster than human graders.

Yes, but: DreamGrader finds only known errors. It can’t catch bugs that instructors haven’t already seen.

Why it matters: Each student submission can be considered a different, related task. The approach known as meta-RL aims to train an agent that can learn new tasks based on experience with related tasks. Connecting these two ideas, the authors trained their model following the learning techniques expressed in the meta-RL algorithm DREAM. Sometimes it’s not about reinventing the wheel, but reframing the problem as one we already know how to solve.

We’re thinking: Teaching people how to code empowers them to lead more fulfilling lives in the digital age, just as teaching them to read has opened doors to wisdom and skill since the invention of the printing press. Accomplishing this on a global scale requires automated systems for education (like Coursera!). It’s great to see AI research that could make these systems more effective.

Data Points

OpenAI sued over alleged violation of privacy

Several unnamed plaintiffs filed a lawsuit against the company, alleging that its use of information found on the internet to train its models constitutes offenses such as larceny, copyright infringement, and invasion of privacy. The plaintiffs seek class-action status. (San Francisco Chronicle)

The Vatican published a handbook on AI ethics

In partnership with Santa Clara University, the Holy See released a manual called “Ethics in the Age of Disruptive Technologies” that contains a strategic plan to enhance ethical management practices for technologies like AI. It’s available for free. (Gizmodo)

Executives signed a letter against the AI Act

Over 160 executives from companies including Meta and Renault warned that the proposed EU regulations would overly regulate AI, burdening developers with high compliance costs and disproportionate liability risks. (Reuters)

AI-generated sites garner advertising revenue

A report found that AI-generated websites are attracting ads served by automated online ad-placement services. Ads for over 140 brands have appeared on such sites, 90 percent of them served by Google. (MIT Technology Review)

Major League Baseball is scouting new players using AI

The league started converting players’ videos into metrics for teams to analyze during their scouting process. Uplift Labs analyzes images from a pair of iPhone cameras to forecast players’ potential and detect their flaws. (The Wall Street Journal)

U.S. to strengthen ban on AI chip sales

The Biden administration is considering tougher restrictions on exports of AI chips to China. An earlier decision banned sales of Nvidia A100 and H100 GPUs, and Nvidia developed A800 and H800 versions for the Chinese market. The new rules would restrict those chips, too, as well as similar products from AMD and Intel. (The New York Times)

Hollywood directors negotiate protection against AI

A union of directors ratified a three-year contract with film studios confirming that AI cannot replace their duties. Unions that represent screenwriters and actors are in the process of negotiating similar agreements. (The New York Times)

Research: AI-generated images of child sexual abuse proliferate

Since August, the volume of photorealistic AI-generated material depicting child sexual abuse circulating on the dark web has risen, a new study shows. This type of material was generated mostly by open source applications developed and distributed with few controls. (The New York Times)